I know many customers have been waiting for the next release of VMware vSphere to take advantage of its new capabilities and features. So here it is: let's check what's new in vSphere 8!

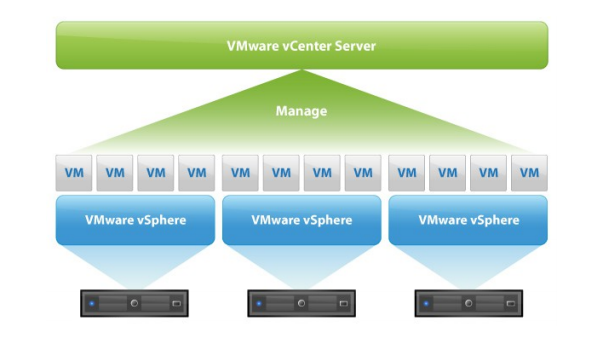

VMware vSphere is the foundation on which most private cloud datacenters run. As VMware puts it, vSphere 8 is the enterprise workload platform that brings the benefits of the cloud to on-premises workloads, supercharges performance through DPUs and GPUs, and accelerates innovation with an enterprise-ready integrated Kubernetes runtime.

In this post, I want to introduce the new features in vSphere 8.0 that I found most useful and interesting!