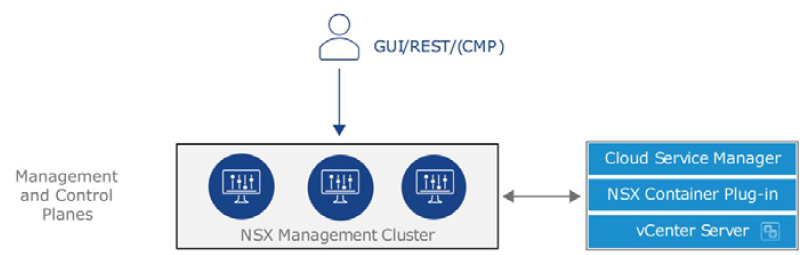

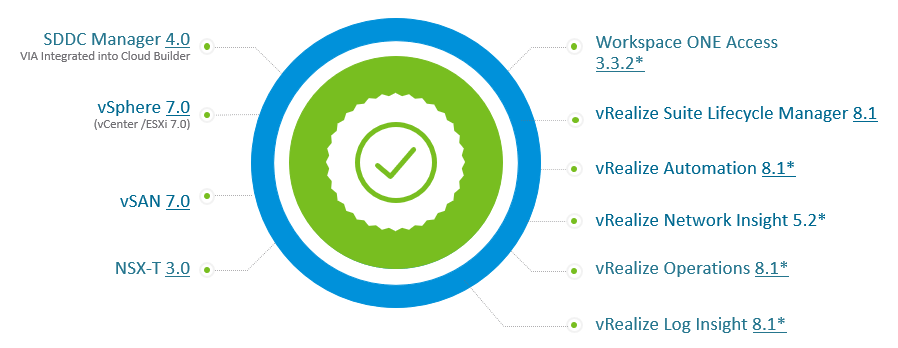

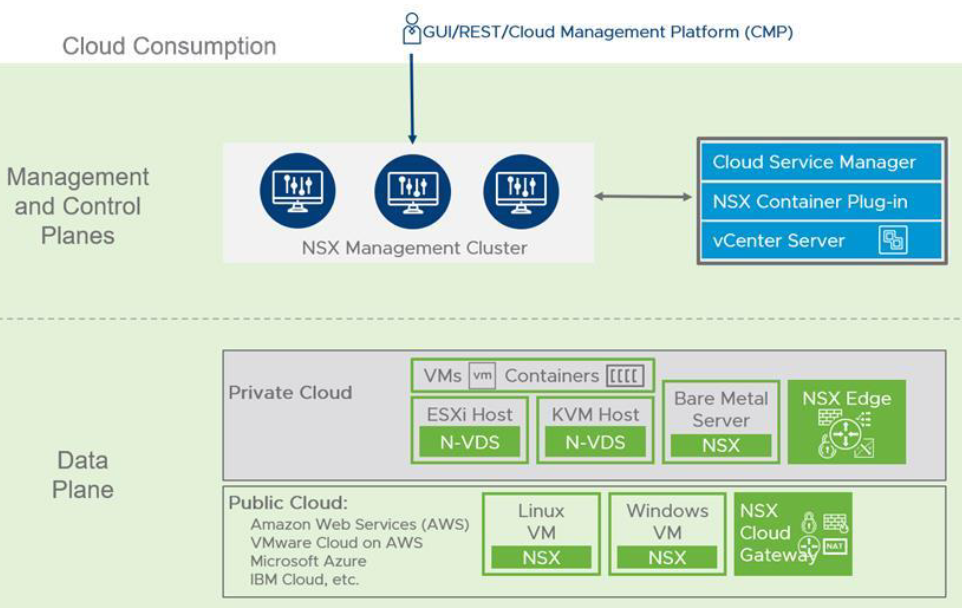

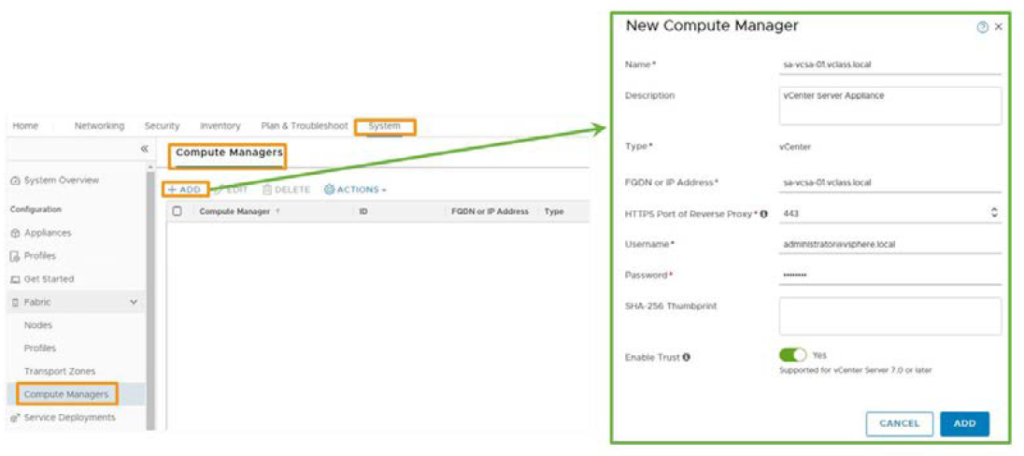

In the previous blog post we started the NSX-T implementation by deploying the first NSX-T Manager. Before deploying the other two NSX-T Managers, we need to add a Compute Manager. As VMware defines it, “A Compute Manager is an application that manages resources such as hosts and VMs. One example is vCenter Server”. We do this because the other NSX-T Managers will be deployed through the web UI with the help of vCenter Server. We can add up to 16 vCenter Servers to an NSX-T Management cluster.

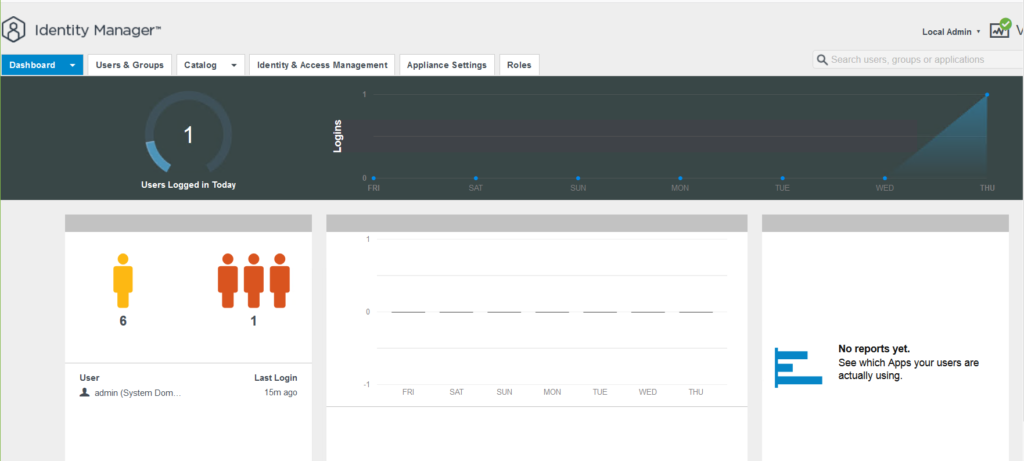

To add a compute manager in NSX-T, it is recommended to create a service account and a customized vSphere Role instead of using a default administrator account. Defining a specific role improves security, because the service account receives only the privileges that NSX-T actually needs. As you can see in the screenshot below, I created a vSphere Role called “NSX-T Compute Manager” with the required privileges. I use this Role to assign permissions to the service account on vCenter Server.
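This post uses the web UI, but the same registration can also be scripted against the NSX-T REST API. The snippet below is only a minimal sketch of the request body that would be sent with `POST /api/v1/fabric/compute-managers`. The field names are written as I understand them from the NSX-T API guide, so verify them against the documentation for your version. The vCenter FQDN, service account name, password and thumbprint are placeholder values I made up for illustration.

```python
import json


def build_compute_manager_payload(vcenter_fqdn, username, password,
                                  thumbprint, display_name=None):
    """Build the JSON body for registering vCenter as a compute manager.

    Assumption: field names follow the NSX-T REST API as I recall it;
    check the API guide for your NSX-T version before relying on them.
    """
    return {
        # Fall back to the FQDN when no friendly name is given.
        "display_name": display_name or vcenter_fqdn,
        "server": vcenter_fqdn,
        "origin_type": "vCenter",
        "credential": {
            "credential_type": "UsernamePasswordLoginCredential",
            # The dedicated service account, not an administrator account.
            "username": username,
            "password": password,
            # SHA-256 thumbprint of the vCenter Server certificate.
            "thumbprint": thumbprint,
        },
    }


# Hypothetical lab values, for illustration only.
payload = build_compute_manager_payload(
    vcenter_fqdn="vcenter.lab.local",
    username="svc-nsxt@vsphere.local",
    password="changeme",
    thumbprint="<sha256-thumbprint-of-vcenter-certificate>",
)

print(json.dumps(payload, indent=2))
```

The sketch builds the payload without sending it, so it can be reviewed before anything touches the NSX-T Manager. Keeping the credentials as function arguments also makes it easy to read them from a secrets store instead of hard-coding them.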

Continue reading “Add Compute Manager to NSX-T 3.0”